The Ultimate Guide to LLM Leaderboards: Part 1

As the demand for AI capabilities continues to grow, the importance of reliable and comprehensive leaderboards will only increase.

Hi folks! 👋 Welcome to the AI Notebook! 📙

I'm KP, your guide through the ever-evolving world of artificial intelligence. Each week, I curate and break down the most impactful AI news and developments to help tech enthusiasts and business professionals like you stay ahead of the curve. Whether you're a seasoned AI pro or just AI-curious, this newsletter is your weekly dose of insights, analysis, and future-forward thinking. Ready to dive in? Let's explore this week's AI story!

"Leaderboard, leaderboard on the web, Which AI model is the brightest of all?"

The Large Language Model (LLM) landscape is constantly evolving, with new models emerging at a rapid pace. This makes it difficult for business leaders and technologists to keep up with the latest advancements and identify the most effective models for their needs. LLM leaderboards play a crucial role in addressing this challenge.

LLM leaderboards are platforms that evaluate and rank different LLMs based on a set of predefined metrics. These metrics can include accuracy, speed, efficiency, cost, and others. The leaderboards have become the de facto scoreboards of the AI world, offering a quantitative glimpse into the capabilities of these sophisticated models. They serve as crucial benchmarks, allowing stakeholders to gauge performance, track progress, and make informed decisions about AI integration and investment.

In this part, we'll dive into LLM leaderboards that are setting the standard in the industry. From assessing linguistic nuance to measuring problem-solving capabilities, these leaderboards offer valuable insights into the strengths and limitations of today's most advanced AI models.

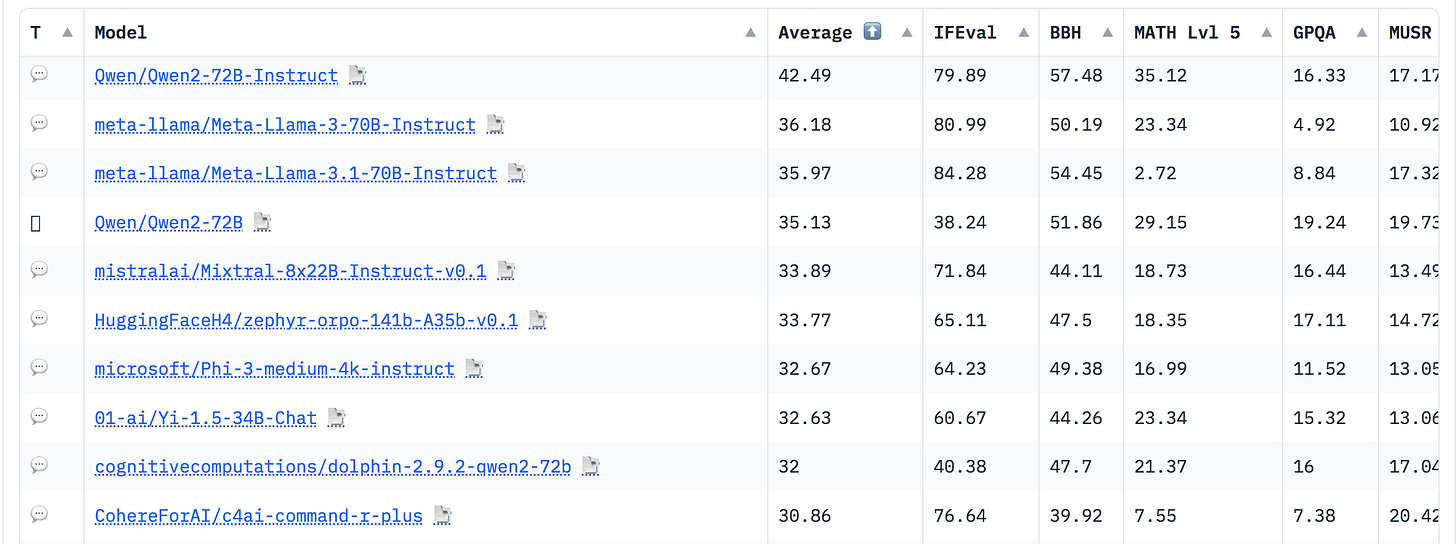

Open LLM Leaderboard v2

The Open LLM Leaderboard, maintained by the community-driven platform Hugging Face, focuses on evaluating open-source language models across a variety of tasks, including language understanding, generation, and reasoning. Hugging Face upgraded the leaderboard to version 2 after recognizing the need for harder, more robust evaluations. Version 1 ran from April 2023 to June 2024 and used six benchmarks: ARC, HellaSwag, MMLU, TruthfulQA, Winogrande, and GSM8K.

Key Metrics and Evaluation Methodology:

Uses a set of six new benchmarks: IFEval, BBH, MATH Lvl 5, GPQA, MuSR, and MMLU-Pro.

Each benchmark score is normalized to a scale between the random baseline (0 points) and the maximum possible score (100 points). The average of the normalized scores gives the final score used for ranking.

Hugging Face prioritizes running models with the most community votes first.
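To make the normalization step concrete, here is a minimal sketch of how a raw score could be rescaled between a random baseline and the maximum and then averaged. The task names and numbers are hypothetical, and clipping below-baseline scores to 0 is an assumption on my part, not a description of Hugging Face's actual code.

```python
def normalize_score(raw: float, random_baseline: float, max_score: float = 100.0) -> float:
    """Rescale a raw score so the random baseline maps to 0 and the maximum to 100."""
    if max_score <= random_baseline:
        raise ValueError("max_score must be greater than random_baseline")
    scaled = (raw - random_baseline) / (max_score - random_baseline) * 100.0
    # Assumption: a model scoring below random guessing is clipped to 0.
    return max(0.0, scaled)


def leaderboard_average(raw_scores: dict, baselines: dict) -> float:
    """Average the normalized scores across all benchmarks."""
    normalized = [normalize_score(raw_scores[name], baselines[name]) for name in raw_scores]
    return sum(normalized) / len(normalized)


# Hypothetical raw accuracies (in percent) and random baselines.
raw_scores = {"four_choice_task": 62.5, "free_form_task": 40.0}
baselines = {"four_choice_task": 25.0, "free_form_task": 0.0}

final_score = leaderboard_average(raw_scores, baselines)
print(f"Final score: {final_score:.1f}")
```

The point of the rescaling is that 62.5% on a four-option multiple-choice task is less impressive than it looks, because random guessing already earns 25%.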

Strengths:

Comprehensive coverage of open-source models, community-driven, and transparent evaluation methodology.

Covers a wide range of linguistic and reasoning tasks.

Regular updates with new models and results.

Limitations:

Reliance on open-source models might limit the scope of comparison.

May not fully capture real-world performance or specialized capabilities.

The leaderboard has a filter category called "Maintainer's Highlight" to prioritize the most beneficial and high-quality models for evaluation.

Use cases:

If you are interested in model knowledge, relevant evaluations will be MMLU-Pro and GPQA. IFEval targets chat capabilities.

MATH Lvl 5, as the name suggests, focuses on math capabilities.

MuSR is particularly worth noting for long-context models.

MMLU-Pro and BBH correlate well with human preference.

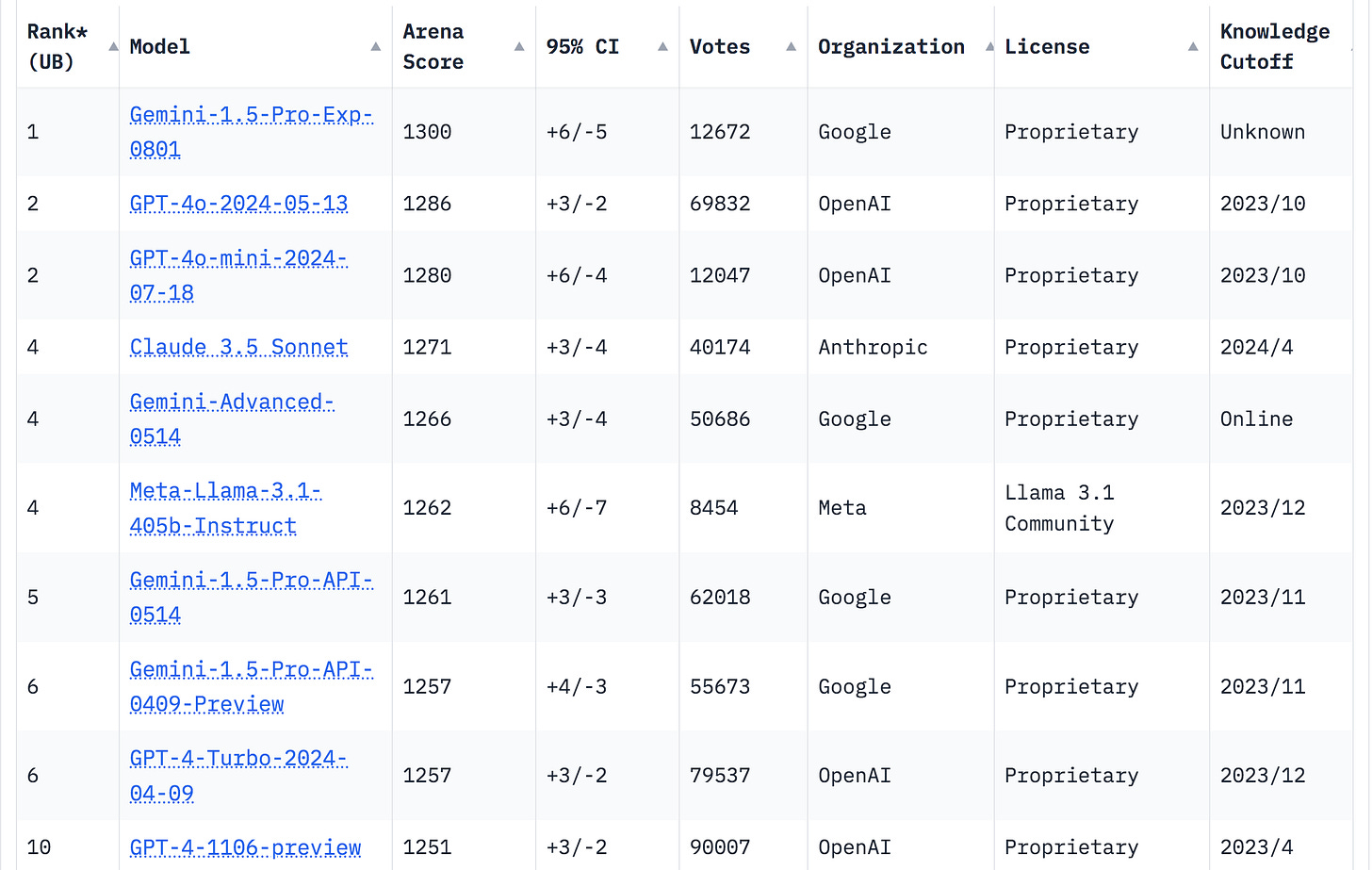

LMSYS Chatbot Arena Leaderboard

LMSYS Chatbot Arena primarily focuses on evaluating chatbot capabilities. It is a crowdsourced platform for ranking LLMs based on human preferences.

Key metrics and evaluation methodology:

Users compare responses from two models side by side and vote for the better one. The votes are aggregated using the Bradley-Terry model (a classical statistical method for ranking based on paired comparisons) to produce Elo-style ratings, similar to the rating system widely used in chess and other competitive games.
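For the curious, here is a rough sketch of how pairwise votes can be turned into Bradley-Terry strengths and then mapped onto an Elo-like scale. It uses a simple iterative fitting scheme in pure Python. The model names, the vote counts, and the base of 1000 with a 400-point scale are illustrative assumptions, not the Arena's actual pipeline or data.

```python
import math
from collections import defaultdict


def bradley_terry(votes, iterations=200):
    """Fit Bradley-Terry strengths from a list of (winner, loser) votes."""
    models = sorted({m for pair in votes for m in pair})
    wins = defaultdict(int)
    pair_counts = defaultdict(int)
    for winner, loser in votes:
        wins[winner] += 1
        pair_counts[frozenset((winner, loser))] += 1

    strength = {m: 1.0 for m in models}
    for _ in range(iterations):
        updated = {}
        for m in models:
            denom = 0.0
            for other in models:
                if other == m:
                    continue
                n = pair_counts.get(frozenset((m, other)), 0)
                if n:
                    denom += n / (strength[m] + strength[other])
            updated[m] = wins[m] / denom if denom else strength[m]
        total = sum(updated.values())
        strength = {m: s / total for m, s in updated.items()}  # keep the sum at 1
    return strength


def to_elo_scale(strengths, base=1000.0, scale=400.0):
    """Map strengths to an Elo-like scale (base and scale are assumed values)."""
    mean_log = sum(math.log10(s) for s in strengths.values()) / len(strengths)
    return {m: base + scale * (math.log10(s) - mean_log) for m, s in strengths.items()}


# Hypothetical votes: each tuple is (winner, loser).
votes = (
    [("model_a", "model_b")] * 7 + [("model_b", "model_a")] * 3
    + [("model_b", "model_c")] * 6 + [("model_c", "model_b")] * 4
    + [("model_a", "model_c")] * 8 + [("model_c", "model_a")] * 2
)

strengths = bradley_terry(votes)
ratings = to_elo_scale(strengths)
for model, rating in sorted(ratings.items(), key=lambda item: -item[1]):
    print(f"{model}: {rating:.0f}")
```

Note that this sketch assumes every model wins at least one vote. A model with zero wins would get a strength of 0, and the logarithm in the Elo conversion would fail.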

Strengths:

Direct human evaluation provides valuable insights into user preferences.

Leaderboard is constantly updated with new models and comparisons.

Limitations:

Subjective nature of human judgment, potential biases in user preferences.

Use cases:

Understanding user perceptions of chatbot capabilities, identifying competitive models, and improving chatbot performance based on user feedback.

Guides the development and improvement of virtual assistants.

The new Multimodal Chatbot Arena is also available now for users to chat and compare vision-language models.

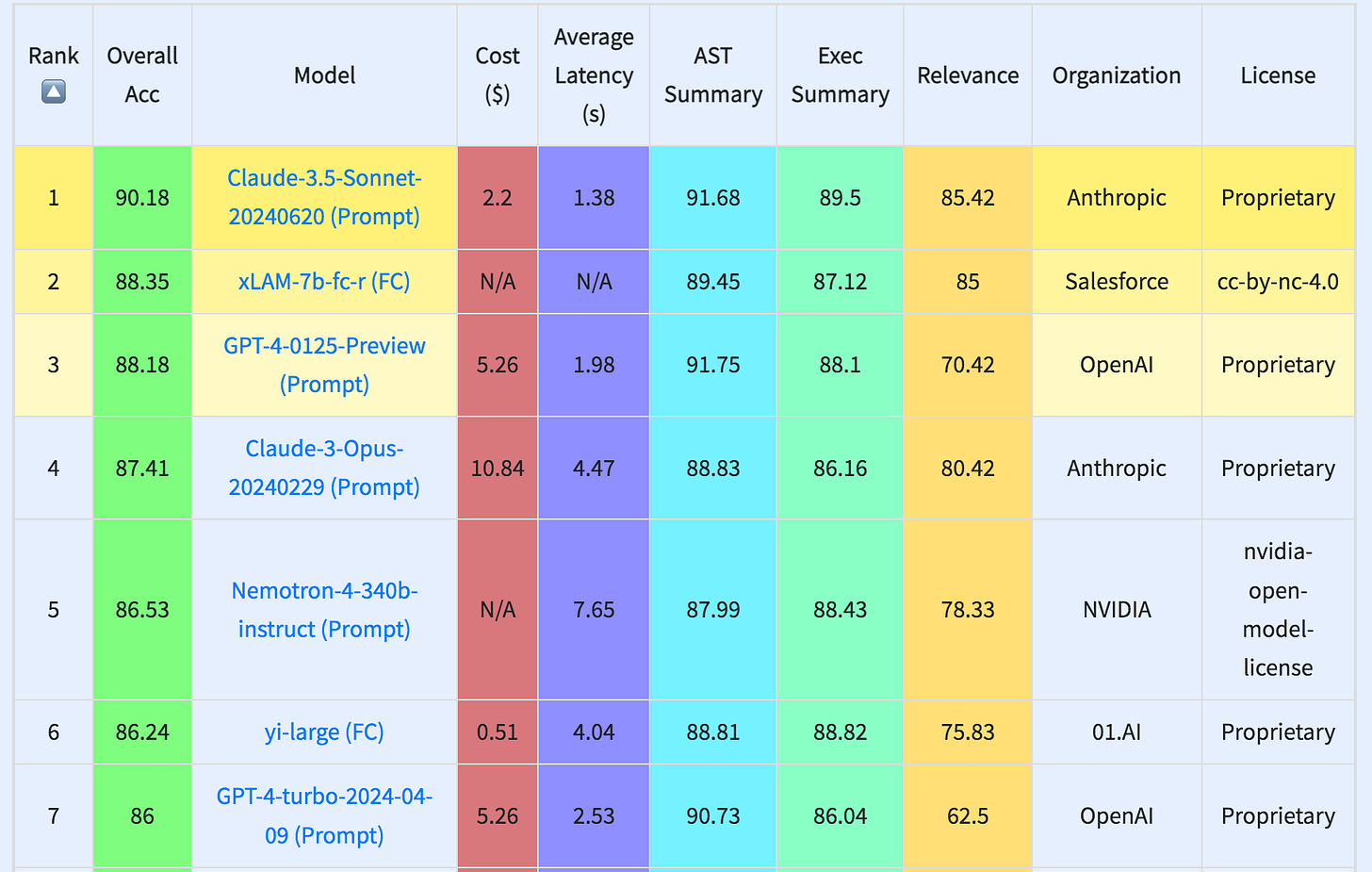

Berkeley Function-Calling Leaderboard (BFCL)

The Berkeley Function-Calling Leaderboard, maintained by UC Berkeley, specifically evaluates LLMs on their ability to interpret and call functions (or tools) across multiple programming languages and application domains, a crucial skill for AI assistants, coding applications and agents. It is also known as the Berkeley Tool Calling Leaderboard.

Key Metrics and Evaluation Methodology:

Evaluates the model’s performance in scenarios requiring simple, multiple and parallel function calls using two methods: Abstract Syntax Tree (AST) evaluation and executable function evaluation.

Uses function relevance detection scenarios to assess the model’s ability to recognize when a provided function is unsuitable for a given question and to appropriately provide an error message.

The leaderboard ranks the models on their ability to generate correct function calls, including selecting the appropriate function and handling multiple calls. It also focuses on cost and latency (read the formulas here).

It uses a comprehensive dataset comprising 2,000 question-function-answer pairs. This includes 100 Java, 50 JavaScript, 70 REST API, 100 SQL and 1,680 Python questions.
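To give a feel for AST-style checking, here is a minimal sketch that parses a model-generated call with Python's built-in `ast` module and compares the function name and arguments against an expected answer. The `get_weather` function, its parameters, and the accepted values are hypothetical, and this simplified checker (keyword arguments only, no optional parameters) is not BFCL's actual implementation.

```python
import ast


def parse_call(source: str):
    """Parse a single Python call expression into (function_name, keyword_args)."""
    node = ast.parse(source.strip(), mode="eval").body
    if not isinstance(node, ast.Call):
        raise ValueError("expected a single function call")
    if node.args:
        raise ValueError("this sketch only supports keyword arguments")
    name = ast.unparse(node.func)  # also handles dotted names such as module.func
    kwargs = {kw.arg: ast.literal_eval(kw.value) for kw in node.keywords}
    return name, kwargs


def ast_match(generated: str, expected_name: str, allowed_values: dict) -> bool:
    """Return True if the generated call uses the right function and acceptable arguments."""
    try:
        name, kwargs = parse_call(generated)
    except (SyntaxError, ValueError):
        return False  # not parseable as a call with literal arguments
    if name != expected_name or set(kwargs) != set(allowed_values):
        return False
    return all(kwargs[key] in allowed_values[key] for key in kwargs)


# Hypothetical expected answer: each parameter lists the values we would accept.
allowed = {"city": ["Paris", "paris"], "unit": ["celsius", "C"]}

print(ast_match('get_weather(city="Paris", unit="celsius")', "get_weather", allowed))
print(ast_match('get_weather(city="London", unit="celsius")', "get_weather", allowed))
print(ast_match("I cannot call a function for this", "get_weather", allowed))
```

The appeal of the AST approach is that the call is never executed, so it works even for functions that do not exist in the test environment. Executable evaluation, by contrast, actually runs the call and checks the result.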

Strengths:

Highly relevant for enterprise agentic workflows, AI coding assistants and automated code generation.

Focuses on practical code generation tasks relevant to software development.

Evaluates both comprehension and generation capabilities.

Limitations:

Narrow focus on function calling may not reflect broader language understanding.

May not capture the nuances of real-world programming scenarios.

Use Cases:

Identifying LLMs suitable for tasks requiring function calling.

Developing AI-powered coding assistants, automating API integration and software development processes.

Enhancing natural language interfaces for technical systems and model's ability to interact with code.

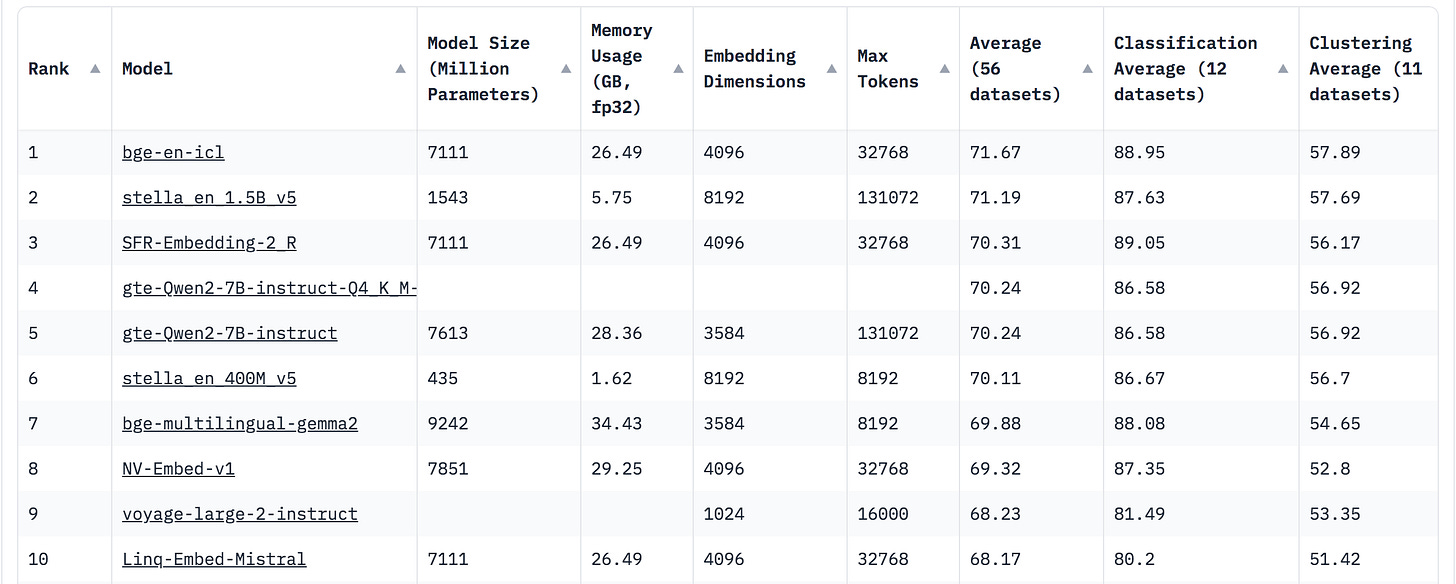

Massive Text Embedding Benchmark (MTEB) Leaderboard

The Massive Text Embedding Benchmark (MTEB) Leaderboard is a widely recognized benchmark for evaluating text embedding models. It assesses embedding quality across a diverse range of tasks and languages, providing a comprehensive evaluation of model performance in various natural language understanding scenarios.

A text embedding is a list of numbers that represents the meaning of a piece of text. For more details, read this article by Cohere.

Text embedding models convert the textual data into numerical vectors that capture the semantic meaning and context of the words they represent.
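Here is a small illustration of why vectors are useful: once text is a list of numbers, "how similar are these two texts?" becomes a bit of arithmetic. The three-dimensional vectors below are made-up toy values (real embedding models produce hundreds or thousands of dimensions), and cosine similarity is just one common way to compare them.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1 means same direction, 0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def rank_by_similarity(query_vector, corpus):
    """Return document names sorted from most to least similar to the query."""
    scored = {name: cosine_similarity(query_vector, vec) for name, vec in corpus.items()}
    return sorted(scored, key=scored.get, reverse=True)


# Toy, hand-written "embeddings" purely for illustration.
corpus = {
    "refund policy": [0.8, 0.2, 0.1],
    "office hours": [0.1, 0.9, 0.2],
    "shipping times": [0.2, 0.1, 0.9],
}
query_vector = [0.9, 0.1, 0.0]  # pretend this encodes "how do I get my money back?"

ranking = rank_by_similarity(query_vector, corpus)
print(ranking)
```

This is the basic mechanic behind retrieval: embed the query, embed the documents, and return the closest matches.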

Key Metrics and Evaluation Methodology:

Encompasses 8 different embedding tasks, covering 58 datasets and 112 languages. The tasks include classification, clustering, pair classification, reranking, retrieval, STS, bitext mining and summarization.

Uses task-specific metrics aggregated into a final score.

Strengths:

Comprehensive evaluation of embedding models, includes both sentence-level and paragraph-level datasets.

Covers a wide range of practical NLP tasks.

Limitations:

Focused solely on embedding models, not full language models.

May not reflect performance in highly specialized domains.

Key Finding:

No single model consistently outperforms others across all tasks and datasets, highlighting each model's strengths and weaknesses depending on the specific task and data. (So, look at task-specific scores when selecting a model!)

Highlights the room for building more versatile and robust text embedding techniques.

Use Cases:

Selecting optimal embedding models for quick and accurate information retrieval systems, improving knowledge discovery and management.

Improving the retrieval of relevant passages from a corpus in RAG systems, providing better context for language models.

Enhancing recommendation engines and similarity-based applications.

Hughes Hallucination Evaluation Model (HHEM) leaderboard

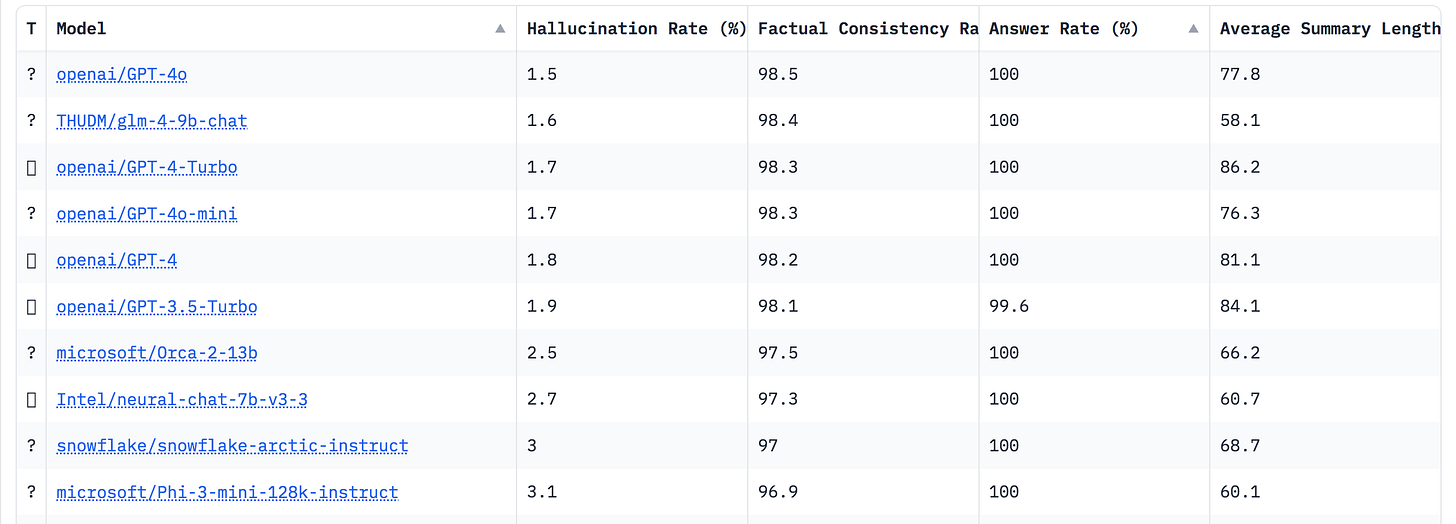

The Hughes Hallucination Evaluation Model (HHEM) Leaderboard, developed by Vectara, measures how often LLMs hallucinate when summarizing documents. It assesses the factual consistency and reliability of AI-generated summaries.

Hallucinations refer to inaccuracies or fabrications generated by LLMs that are not present in the source material. The leaderboard aims to identify and assess these hallucinations to improve the reliability and accuracy of model-generated summaries.

Key Metrics and Evaluation Methodology:

Uses Vectara's HHEM-2.1 hallucination evaluation model to score summaries and determine rankings on the leaderboard.

For each source document and LLM-generated summary pair, HHEM outputs a hallucination score between 0 (complete hallucination) and 1 (factual consistency).

Evaluation dataset consists of 1006 documents from multiple public datasets, primarily the CNN/Daily Mail Corpus. Summaries are generated for each document and hallucination scores are computed for each document-summary pair.

It uses Hallucination Rate (percentage of summaries with a hallucination score below 0.5) as a metric. Other metrics include Factual Consistency Rate (complement of the hallucination rate), Answer Rate and Average Summary Length.
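The two headline metrics follow directly from the per-summary scores. As a quick sketch, assuming we already have a list of HHEM scores (the five values below are invented for illustration), the rates can be computed like this:

```python
def summarize_hhem_scores(scores, threshold=0.5):
    """Compute hallucination and factual consistency rates (in percent) from HHEM scores."""
    if not scores:
        raise ValueError("need at least one score")
    # A summary counts as hallucinated when its score falls below the threshold.
    hallucinated = sum(1 for score in scores if score < threshold)
    hallucination_rate = 100.0 * hallucinated / len(scores)
    return {
        "hallucination_rate": hallucination_rate,
        "factual_consistency_rate": 100.0 - hallucination_rate,
    }


# Hypothetical scores for five document-summary pairs.
example_scores = [0.92, 0.31, 0.77, 0.48, 0.65]

metrics = summarize_hhem_scores(example_scores)
print(metrics)
```

Two of the five invented scores fall below 0.5, so this toy model would have a hallucination rate of 40% and a factual consistency rate of 60%.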

Strengths:

Focuses on a critical aspect of LLM performance: factual consistency in summarization tasks.

Provides a quantitative measure of hallucination, a common concern in AI-generated content.

Includes multiple metrics to give a comprehensive view of model performance.

Limitations:

Primarily focused on summarization tasks, may not reflect performance in other areas.

The binary threshold for hallucination (0.5) may not capture nuanced differences in factual consistency.

Use Cases:

Compare and select models with lower hallucination rates for more reliable automated content creation.

Choose LLMs that maintain high factual consistency for document summarization tasks.

Evaluate potential risks associated with using AI-generated content in sensitive or high-stakes contexts.

Guide research and development efforts to improve LLM factual consistency and reduce hallucinations.

As the demand for advanced AI capabilities continues to grow, the importance of reliable and comprehensive leaderboards will only increase. By understanding the strengths and limitations of different platforms, businesses can make informed decisions about LLM adoption and development.

This week we took a closer look at some of the most prominent LLM leaderboards:

Open LLM Leaderboard v2 - go-to scoreboard for open-source language models

LMSYS Chatbot Arena Leaderboard - where models battle it out in conversational prowess

Berkeley Function-Calling Leaderboard - assesses how well LLMs can understand and call tools

MTEB Leaderboard - champion of text embedding evaluations

HHEM Leaderboard - keeping models honest in their summaries

But wait, there's more! This is just the first leg. Next week, we'll continue our expedition into the world of LLM leaderboards.

While current leaderboards offer valuable insights, there is still room for improvement. Expanding the scope of benchmarks, incorporating more diverse datasets, and addressing ethical considerations will be essential for the future of LLM evaluation. By working together, the AI community can develop even more robust and transparent leaderboards that drive progress in the field.

If you enjoyed this week’s story of The AI Notebook,

📥 Subscribe for free weekly insights and,

🔗 share with friends and colleagues who might find it valuable. Your support helps keep this newsletter brewing! ☕📚

☕ This month, I've been enjoying Unnaki Estate coffee from Blue Tokai Coffee Roasters. See you next week!
